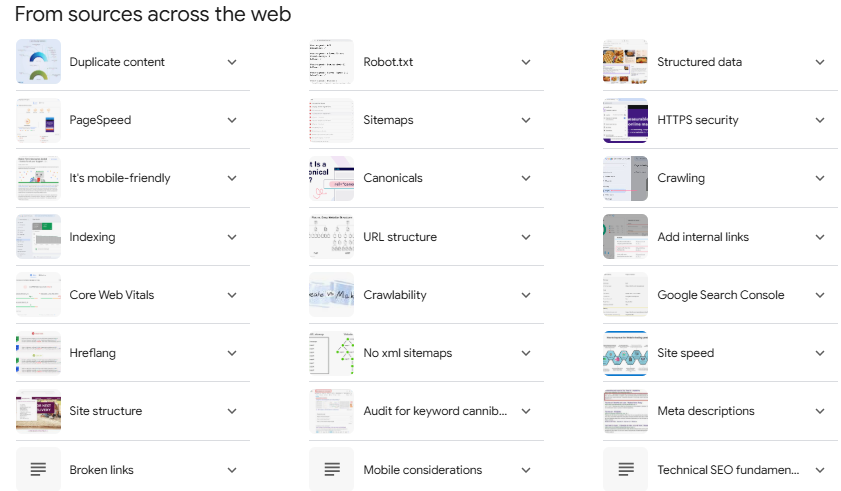

What is Technical SEO?

Why is Technical SEO Important for Search Engines?

Technical SEO is very important for search engines for a few key reasons:

- Helps Search Engines Find Pages: When your website is well-organized, search engines can easily find and look through all the important pages quickly.

- Improves Indexing: Fixing technical issues helps search engines list your content correctly, which increases the chances of it showing up in search results.

- Improves Website Performance: Technical SEO makes your website faster, safer, and easier to use on mobile devices—things that search engines care about when deciding how to rank your site.

- Helps Search Engines Use Their Time Well: By improving the technical setup of your site, you make sure search engines focus on your most important pages, helping them crawl and index more effectively.

- Clearer Content Signals: Using special code (like schema markup) helps search engines understand your content better, which could make your site stand out in search results with extra features.

- Better Mobile Experience: Since search engines now look at how mobile-friendly your site is, making sure it works well on phones is key for improving your ranking.

- Improves Security: Adding HTTPS to your website encrypts the traffic between visitors and your server, and Google uses HTTPS as a lightweight ranking signal.

- Avoids Duplicate Content: Fixing issues with duplicate content helps search engines understand which version of a page to rank, avoiding confusion and improving your search visibility.

When you fix these technical parts, your website becomes easier for search engines to crawl, understand, and rank, helping it show up higher in search results.

How Important is Technical SEO for a Website to Rank on Search Engines?

Technical SEO is the foundation of good website visibility. Just as you can’t put up a building without a solid foundation, you can’t expect a website to rank without sound technical SEO.

For your website to rank well, first your Technical SEO needs to be done correctly. It’s the foundation that helps your website appear and perform better in search results. If you don’t get it right, it affects the following:

- Crawlability and Indexability: Technical SEO helps search engine bots easily explore your website and index its content, making sure it can be found in search results.

- Ranking Factors: Many technical aspects directly affect how well your website ranks, including:

  - Page Speed: Faster websites tend to rank higher and give a better experience to users.

  - Mobile-Friendliness: With mobile-first indexing, it’s crucial to have a website that works well on phones and tablets to rank well across all devices.

  - Security: Adding HTTPS to your website makes it secure and can help improve your ranking.

- User Experience: Things like fast loading times and a mobile-friendly design improve user experience, which is a key factor for search engine rankings.

- Crawl Budget Efficiency: By improving your site’s technical setup, you help search engines focus on crawling and indexing your most important pages, using their time more effectively.

- Structured Data: Using structured data (special code) helps search engines understand your content better, which can make your website show up in search results with extra features, like rich snippets.

- Site Architecture: A clear and organized website structure, with good internal links, helps search engines understand how your content is related and which pages are most important.

Without good technical SEO, even great content may struggle to rank well. Technical SEO provides the foundation that helps other SEO efforts, like content and backlinks, make a bigger impact on your site’s visibility in search results.

What is Crawlability in SEO?

Crawlability is how easily search engine bots, like Googlebot, can access and explore your website. If your website is crawlable, these bots will be able to find, analyze, and index the content on your pages.

If what you have published isn’t getting noticed, crawlability is the first thing to check. Ask yourself whether search engines can reach the content on your pages without obstacles.

Why is Crawlability Important in SEO?

- Helps Search Engines Find Content: Crawlability makes it easier for search engines to discover your pages.

- Necessary for Ranking: Without crawlability, your pages might not get indexed or ranked in search results.

- Ensures Visibility: Poor crawlability can hide great content from search engines.

A site with good crawlability allows search engines to discover new pages, see updates, understand structure, and evaluate relevance.

How to Test Crawlability

What is Indexability?

Indexability is a page’s ability to be discovered, crawled, and added to a search engine’s database, making it possible for the page to appear in search results. For a page to be indexable, search engines must be able to:

- Discover the page

- Access its content

- Understand the information on the page

Only indexable pages can show up in search results. If a page isn’t indexable, it won’t appear in search results, no matter how good the content is.

How to Check if Your Page is Indexed

To check if your pages are indexable, use these tools:

- Google Search Console: Use the “URL Inspection Tool” to check if pages are crawlable and indexable.

- Screaming Frog SEO Spider: This tool checks crawlability and indexability by scanning your site like a search engine.

- Ahrefs Site Audit: This tool examines your site’s SEO health and highlights indexability issues.

- SEMrush Site Audit: Helps find indexability problems and improve site health.

- Sitebulb: Provides an indexability check report to identify setup issues.

- Chrome Extensions: Tools like Indexsor provide quick checks of a page’s indexability directly in your browser.

When checking indexability, look for:

- Meta Robots Tags

- Robots.txt File Directives

- Canonical Tags

- Server Response Codes

- Page Load Speed
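Two of the checks above, the server response code and the meta robots tag, are easy to automate. The sketch below is a minimal illustration and not how any of the listed tools works internally. The function name `indexability_blockers` is made up for this example, and it assumes you have already fetched the page and have its status code and HTML at hand.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives of every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key: (value or "") for key, value in attrs}
        if attr.get("name", "").lower() == "robots":
            content = attr.get("content", "")
            self.directives += [d.strip().lower() for d in content.split(",")]


def indexability_blockers(status_code, html):
    """Return reasons the page may not be indexed (empty list = none found).

    Hypothetical helper: it only looks at the status code and meta robots tag.
    """
    blockers = []
    if status_code != 200:
        blockers.append(f"status code {status_code}")
    parser = RobotsMetaParser()
    parser.feed(html)
    if "noindex" in parser.directives:
        blockers.append("meta robots: noindex")
    return blockers


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(indexability_blockers(200, page))
```

An empty list only means these two checks passed. Robots.txt rules, canonical tags, and page speed still need their own checks.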

Make sure your web pages can be found in search results.

You can check this by typing “site:[your website]” in Google or Bing’s search bar. Sometimes a page might appear in one search engine but not another.

If search engines can’t find your pages, they won’t show up when people search for them.

Keep your website easy for Google to read by having a clear sitemap and removing any blocks that might stop Google from seeing your content.

How to fix pages that are not indexed?

If a page is not indexed, follow these steps to fix the issue:

- Check for Technical Issues:

  - Ensure the page isn’t blocked by the robots.txt file.

  - Verify that there’s no “noindex” tag in the page’s meta robots tag.

  - Check for proper HTTP status codes (avoid errors like 4xx or 5xx).

- Review Content Quality:

  - Make sure the page has unique, high-quality content that matches what users are searching for.

  - Check for duplicate content on your site.

- Improve Internal Linking:

  - Create a strong internal linking structure to help search engines discover and understand the page’s importance.

- Verify Crawl Budget:

  - Check if your site has crawl budget issues that may prevent indexing important pages.

- Use Google Search Console:

  - Submit the URL for indexing using the URL Inspection Tool.

  - Review the Page indexing report (formerly the Coverage report) to spot specific indexing issues.

- Optimize Page Performance:

  - Improve page load speed and mobile-friendliness, as these affect indexing.

- Build Quality Backlinks:

  - Get relevant, high-quality backlinks to signal the page’s importance to search engines.

If the problem continues, consider running a full technical SEO audit to find any deeper issues affecting your site’s indexability.

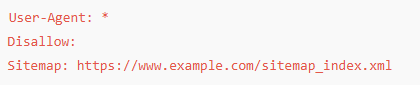

What is a Robots.txt File?

A robots.txt file is a plain text file located in the root directory of a website. It implements the Robots Exclusion Protocol and serves as a set of guidelines for search engine bots.

It tells search engine bots which pages they may crawl and which to skip. Note that it controls crawling, not indexing: a blocked URL can still end up in the index if other pages link to it.

This file is an important part of technical SEO as it allows website owners to control how search engines interact with their site.

Points to Note:

- It’s located at the root of a domain (e.g., yourwebsite.com/robots.txt).

- It uses simple rules to guide search engine bots (user-agents).

- The protocol was published as RFC 9309 in 2022, and major search engines follow its rules.

- It helps manage crawling access and, for crawlers that honor the Crawl-delay directive, crawling speed. Googlebot ignores Crawl-delay.

A basic robots.txt file might look like this:

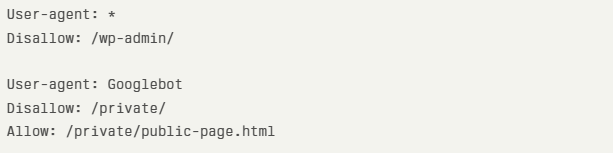

If a section or page is disallowed, you will see a Disallow rule for it. In the image above, the folder ‘private’ is kept out of reach.
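In case the image does not load, here is a text version you can experiment with. The sample file below is an assumption modeled on the description (a ‘private’ folder that is disallowed), not a transcription of the image. It uses Python’s standard `urllib.robotparser` module to check which URLs the rules allow.

```python
from urllib.robotparser import RobotFileParser

# Assumed sample file: blocks /private/ for all bots and lists a sitemap.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

Sitemap: https://yourwebsite.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(SAMPLE_ROBOTS_TXT.splitlines())


def is_crawlable(url, user_agent="*"):
    """Return True if the sample rules let this user agent crawl the URL."""
    return parser.can_fetch(user_agent, url)


print(is_crawlable("https://yourwebsite.com/blog/"))
print(is_crawlable("https://yourwebsite.com/private/report.html"))
print(parser.site_maps())  # available in Python 3.8 and later
```

To test a live site instead, you could call `set_url()` and `read()` on the parser with your own robots.txt address.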

Common Robots.txt Errors to Avoid:

- Incorrect File Location: The robots.txt file must be in the root directory of the website.

- Poor Use of Wildcards: Be careful when using * (asterisks) or $ (dollar signs), as they can block or allow too much content.

- Using “noindex” in Robots.txt: The “noindex” directive doesn’t work in robots.txt and can cause indexing problems.

- Blocking Essential Resources: Don’t block important resources like scripts or stylesheets needed to properly display your pages.

- Missing Sitemap URL: Include your sitemap URL in the robots.txt file to help search engines find your content faster.

- Case Sensitivity Issues: Paths in robots.txt rules are case-sensitive. “Disallow: /Folder” won’t block “/folder”.

- Using Relative URLs for Sitemaps: Always use full (absolute) URLs for the sitemap location in the robots.txt file.

- One Robots.txt File for Multiple Subdomains: Each subdomain needs its own robots.txt file.

Avoid these mistakes and configure your robots.txt file correctly to help search engines better understand your website.
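A few of these mistakes can be caught with a simple script. The sketch below checks only three of them: a “noindex” line, a relative sitemap URL, and a missing sitemap line. The function name `lint_robots_txt` and the warning texts are invented for this example, and the parsing is deliberately simplified.

```python
def lint_robots_txt(text):
    """Return a list of warnings for a robots.txt file given as a string."""
    warnings = []
    has_sitemap = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line or ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "noindex":
            warnings.append(f"line {number}: noindex is not supported in robots.txt")
        elif field == "sitemap":
            has_sitemap = True
            if not value.lower().startswith(("http://", "https://")):
                warnings.append(f"line {number}: sitemap URL should be absolute")
    if not has_sitemap:
        warnings.append("no Sitemap line found")
    return warnings


sample = "User-agent: *\nDisallow: /private/\nNoindex: /tmp/\nSitemap: /sitemap.xml\n"
for warning in lint_robots_txt(sample):
    print(warning)
```

Wildcard and case-sensitivity problems depend on your actual URLs, so they are better tested by checking real page addresses against the rules.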

How to Create and Submit an XML Sitemap

- Generate the Sitemap:

  - Use a sitemap generator tool or plugin (e.g., All in One SEO for WordPress).

  - Or, manually create it using PHP code if preferred.

- Ensure Proper Formatting:

  - Follow correct XML syntax.

  - Include essential tags like `<urlset>`, `<url>`, and `<loc>`.

  - Keep the file under 50MB and limit it to 50,000 URLs.

- Submit the Sitemap:

  - Sign in to Google Search Console.

  - Go to the Sitemaps section.

  - Enter the sitemap URL (e.g., domain.com/sitemap.xml).

  - Click Submit.

- Add Sitemap to Robots.txt:

  - Include the sitemap URL in your robots.txt file to help search engines discover it faster.
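If you would rather script the sitemap than use a plugin, the formatting rules above can be sketched in a few lines. This example uses Python instead of PHP, purely as an illustration. The function name `build_sitemap` is hypothetical, and the URLs are placeholders based on the domain.com example.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # per-file limit mentioned above


def build_sitemap(urls):
    """Return a sitemap as UTF-8 bytes with one <url><loc> entry per URL."""
    if len(urls) > MAX_URLS:
        raise ValueError("too many URLs for one sitemap file")
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # special characters are escaped
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


sitemap = build_sitemap(["https://domain.com/", "https://domain.com/about/"])
print(sitemap.decode("utf-8"))
```

Saving the returned bytes as sitemap.xml in your site’s root gives you the file to submit in Google Search Console.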

Common XML Sitemap Mistakes to Avoid

- Including Non-Indexable Pages:

  - Don’t list URLs with ‘noindex’ tags or blocked by robots.txt.

- Ignoring XML Syntax Rules:

  - Validate your sitemap with a sitemap validator tool and make sure it follows the correct protocol.

- Using Incorrect URL Formats:

  - Ensure URLs are properly formatted and canonical tags point to the correct URLs.

- Forgetting to Update the Sitemap:

  - Regularly update your sitemap as your website content changes.

- Overusing Sitemap Priority:

  - Use the priority tags sparingly and only when appropriate.

- Not Using Sitemap Index for Large Sites:

  - For sites with multiple sitemaps, use a sitemap index file.

- Neglecting to Monitor Sitemap Performance:

  - Regularly check your sitemap status in Google Search Console.

By following these guidelines and avoiding these mistakes, you can ensure your XML sitemap helps search engines crawl and index your site effectively, improving your SEO performance.
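For large sites, the sitemap index mentioned above can be sketched like this. The code splits a long URL list into chunks of at most 50,000 and builds an index file that points to each chunk. The function name and the sitemap-1.xml, sitemap-2.xml file naming are assumptions made for this example, so use whatever naming your site already follows.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def split_into_sitemaps(urls, base_url, chunk_size=50_000):
    """Return (chunks, index_xml): URL chunks plus a sitemap index as bytes."""
    chunks = [urls[i:i + chunk_size] for i in range(0, len(urls), chunk_size)]
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for number in range(1, len(chunks) + 1):
        sitemap = ET.SubElement(index, "sitemap")
        # Hypothetical naming scheme for the child sitemap files.
        ET.SubElement(sitemap, "loc").text = f"{base_url}/sitemap-{number}.xml"
    return chunks, ET.tostring(index, encoding="utf-8", xml_declaration=True)


pages = [f"https://domain.com/page-{n}/" for n in range(5)]
chunks, index_xml = split_into_sitemaps(pages, "https://domain.com", chunk_size=2)
print(len(chunks))
print(index_xml.decode("utf-8"))
```

Each chunk still has to be written out as its own sitemap file. The index only tells search engines where those files live.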

Website Architecture

Website architecture is like the blueprint or map of your website. It decides how everything is organized and connected, making it easier for both visitors and search engines to find and understand the content.

Think of it as designing a building: the layout, hallways, rooms, and how they all connect make it easy for people to move around and find what they need.

Key Parts of Website Architecture:

- Site Hierarchy: This is how pages are arranged, from the homepage down to categories and individual pages. It’s like how a building has a main entrance, then leads to different rooms or floors.

- Navigation: Navigation is like the signs in a building guiding you where to go. It includes menus and internal links that help users move from one page to another on your site.

- URL Structure: URLs (web addresses) should match the site’s organization, making them easy to read and understand. Just like floor numbers and room names help people navigate a building, clear URLs help users and search engines understand where they are on your site.

- Content Organization: Grouping related information together logically. This is like arranging items in a store or rooms in a house so that similar things are near each other.

- Internal Linking: Internal links are like doorways connecting related rooms. They help users find related pages and help search engines understand how your content is related.

Why Website Structure Matters:

- Helps Search Engines Understand Your Content: Just like workers in a store need a map to understand where each item is, search engines need a clear structure to understand what’s on your website and how it all connects. This helps them show your site to people searching for related topics.

- Makes Navigation Easier for Users: A well-organized website is like a store with clear aisles and signs. It makes it easy for visitors to find what they need without getting lost.

- Boosts Your Site’s Authority: When your content is organized, it shows you’re an expert on certain topics, which can help your site show up higher in search results.

Think of your website as a big modern store with lots of different aisles and shelves.

If everything is arranged in a neat, logical way, both customers (your visitors) and workers (search engines) can easily find what they’re looking for.

But if everything is jumbled, people will struggle to find what they want, and the workers won’t be able to organize it properly.

Tips for a Better Website Structure:

- Group Similar Content: Think of creating categories (like sections in a store) where similar pages or topics are grouped together. This helps both users and search engines.

- Simple and Clear Menus: Your main menu should only have a few categories (around 7 or 8). Use clear labels so visitors know exactly what each one is.

- Easy Navigation: Visitors should be able to reach any page on your site within three clicks. This keeps things simple and quick.

- Internal Links: Just like a store might have signs pointing to related sections, you should link pages on your site to each other, helping people discover more and giving search engines a clearer idea of how pages are connected.

- Descriptive URLs: URLs (the web addresses) should be simple and tell people what the page is about. This helps visitors and search engines alike.

- Breadcrumbs: Breadcrumbs are like a trail showing visitors where they are on your site. They help people understand how to get back to where they started.

- Proper Headings: Use headings (like H1, H2, and H3) to break up your content. This makes your content easier to read and also helps search engines know what’s most important.

- Sitemaps: Create a map of your site and send it to search engines. This makes it easier for them to “crawl” your pages and index them faster.

- Avoid Isolated Pages: Every page should be linked to something else. If a page is “orphaned” (not connected to anything), it’s harder for both visitors and search engines to find.

- Use a Hierarchical Structure: A clear, organized structure works best for most websites and helps both users and search engines understand the importance of each page.

By organizing your website in a logical, easy-to-navigate way, you’ll make it better for both users and search engines, which can help your site perform better overall.
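Two of the tips above, the three-click rule and avoiding isolated pages, can be checked with a small script once you have a list of which page links to which. The sketch below assumes you already have that link map, for example from a crawler export. The page paths and function names are made up for illustration.

```python
from collections import deque


def click_depths(links, home):
    """Breadth-first search from the homepage; returns {page: clicks from home}."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def audit_structure(links, home, max_clicks=3):
    """Return (unreachable pages, pages deeper than max_clicks)."""
    depths = click_depths(links, home)
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    unreachable = sorted(all_pages - set(depths))
    too_deep = sorted(page for page, depth in depths.items() if depth > max_clicks)
    return unreachable, too_deep


# Hypothetical link map: each page and the pages it links to.
site = {
    "/": ["/blog/", "/shop/"],
    "/blog/": ["/blog/post-1/"],
    "/blog/post-1/": ["/blog/post-2/"],
    "/blog/post-2/": ["/blog/post-3/"],
    "/old-landing/": ["/"],
}
print(audit_structure(site, "/"))
```

Pages in the first list cannot be reached by following links from the homepage, which includes orphaned pages. Pages in the second list are candidates for extra internal links from higher-level pages.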

Fixing Common Technical Issues

Broken Links

Broken links, which lead to 404 errors (pages that can’t be found), can hurt both the experience of visitors and your website’s performance in search engines. It’s like a store with doors that don’t open or signs pointing to empty shelves: it frustrates customers and gives the impression that the store isn’t well taken care of.

How Broken Links Impact SEO:

- Lower Quality: A lot of broken links make Google think your website is outdated or poorly maintained, which can lower its overall quality in their eyes.

- Waste Crawl Budget: Search engines have a limited amount of time and resources to visit your site. Broken links use up this time, preventing them from indexing other important pages on your site.

- Lost SEO Power: When you link to a page that’s broken, any “authority” or value from that link gets wasted. It doesn’t help improve your SEO.

- Higher Bounce Rates: If users click a link and end up on a broken page, they’re likely to leave the site quickly, which negatively impacts how engaging your site is.

- Lower Search Rankings: If Google finds broken links on your pages, it may rank those pages lower in search results.

- Lost Link Power: If external websites link to a broken page on your site, you lose the SEO benefits of those backlinks, which help your site rank better.

Tools to Find Broken Links:

- Screaming Frog SEO Spider: This tool scans your site and finds broken links, giving you detailed reports on where they are.

- Google Search Console: Google’s own tool helps you identify crawl errors, including broken links.

- Broken Link Checker Plugin: For WordPress sites, this plugin scans and helps fix broken links.

- Xenu Link Sleuth: A free tool that checks for broken links on your site.

- W3C Link Checker: Validates links and highlights any broken ones.

- Atomseo Broken Link Checker: A free web-based tool that scans and reports on broken links across your website.

By regularly checking for and fixing broken links, you can improve your site’s user experience, keep your SEO efforts strong, and help your pages rank higher in search engine results.
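To see roughly what these tools do, here is a minimal sketch of a broken link check for a single page. It is not how any of the tools above is implemented. The `get_status` argument is a hypothetical function you supply that returns the HTTP status code for a URL. In this example it is faked with a dictionary so the code runs without touching the network.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the absolute URL of every <a href="..."> on a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(urljoin(self.base_url, href))


def find_broken_links(page_url, html, get_status):
    """Return (url, status) pairs for links whose status code is 400 or higher."""
    extractor = LinkExtractor(page_url)
    extractor.feed(html)
    broken = []
    for link in extractor.links:
        status = get_status(link)
        if status >= 400:
            broken.append((link, status))
    return broken


# Fake status lookup standing in for real HTTP requests.
statuses = {
    "https://example.com/old-page/": 404,
    "https://example.com/blog/post-1/": 200,
}
page_html = '<a href="/old-page/">Old</a> <a href="post-1/">Post</a>'
print(find_broken_links("https://example.com/blog/", page_html, statuses.get))
```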

301 Redirect

A 301 redirect is like forwarding your mail when you move to a new address. It automatically sends visitors and search engines from an old web address (URL) to a new one. It tells both people and search engines that the page has permanently moved to a new location.

Key Points About 301 Redirects:

- Transfer SEO Value: When you use a 301 redirect, it passes the “link authority” from the old page to the new one. This helps keep your search engine rankings intact.

- Maintains SEO Rankings: If you change a page’s URL, a 301 redirect ensures that your SEO value is preserved, and search engines know where the page is now.

- The “301”: This is the code that your server sends to indicate a permanent move. It’s like a stamp on your mail forwarding request.

How to Implement and Manage Redirects Effectively:

Use SEO Plugins (for WordPress):

- All in One SEO (AIOSEO): This plugin has a built-in redirect manager for easy setup.

- Redirection Plugin: Another plugin that makes it simple to create and manage redirects.

Edit the .htaccess File:

- This file is in your site’s root folder and allows you to set up redirects directly on your server.

- Add redirect rules that tell the server how to handle the move.

Use Server-Side Scripting:

- If you’re familiar with programming, you can use PHP to set up redirects on your site.

Best Practices for Redirects:

- Redirect to Relevant Pages: When you’re redirecting, make sure the old URL points to a similar, relevant page so that users and search engines don’t get confused.

- Use 301 for Permanent Moves: Only use 301 redirects if the move is permanent. For temporary changes, use a 302 redirect.

- Monitor Redirects Regularly: Check that your redirects are still working properly and avoid “redirect chains” (where one redirect leads to another) or loops (where redirects go back to the original page).

- Use Redirects During Site Changes: When redesigning your site or moving pages, 301 redirects help you maintain your SEO value and ensure users can still find your content.
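Redirect chains and loops are easy to miss by eye, so here is a small sketch that finds them in a list of planned redirects. It follows each old URL to its final destination, raises an error on a loop, and then prints one rule per old URL. The output format assumes an Apache server where the `Redirect` directive is available in .htaccess. The function names and paths are invented for this example.

```python
def final_destinations(redirects):
    """Map every old URL to its final target; raise ValueError on a loop."""
    resolved = {}
    for source in redirects:
        seen = [source]
        target = redirects[source]
        while target in redirects:  # the target is itself redirected: a chain
            if target in seen:
                raise ValueError("redirect loop: " + " -> ".join(seen + [target]))
            seen.append(target)
            target = redirects[target]
        resolved[source] = target
    return resolved


def to_htaccess(redirects):
    """Return one 'Redirect 301' line per old URL, with chains flattened."""
    flat = final_destinations(redirects)
    return "\n".join(f"Redirect 301 {old} {new}" for old, new in sorted(flat.items()))


# Hypothetical plan containing a chain: /old/ -> /interim/ -> /new/
plan = {
    "/old/": "https://example.com/interim/",
    "https://example.com/interim/": "https://example.com/new/",
}
print(final_destinations(plan))
print(to_htaccess({"/old/": "/interim/", "/interim/": "/new/"}))
```

Pointing every old URL straight at its final destination means visitors and bots make one hop instead of several.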

Tools to Manage Redirects:

- Google Search Console: Helps monitor any crawl errors or issues with redirects.

- Screaming Frog SEO Spider: Identifies broken links and can help you find pages that need redirects.

By setting up 301 redirects correctly, you ensure that visitors and search engines can find your content, keep your SEO rankings, and avoid frustration when pages move to new locations.

Canonical Tags

Canonical tags tell search engines which version of a webpage is the “official” one when there are multiple pages with similar or duplicate content. This helps prevent SEO problems that can arise from having identical content spread across different pages on your site.

Key Points About Canonical Tags:

- Location: Canonical tags are placed in the `<head>` section of a webpage’s HTML code, which is the part of the webpage that holds important meta-information.

- Format: The tag looks like this: `<link rel="canonical" href="https://example.com/preferred-url-here/" />`. It tells search engines which page to prioritize.

- Consolidates Link Value: Canonical tags combine the “link equity” (or SEO power) of duplicate pages, helping search engines focus on the most important page.

How to Use Canonical Tags Correctly:

- Identify the Preferred Page: Choose which version of a page should be the main one. This is usually the most comprehensive or original version.

- Add the Canonical Tag: On all pages with similar content, add the canonical tag in the `<head>` section, pointing to the preferred page.

- Self-Referencing Canonicals: Even on the preferred page itself, add a canonical tag that points back to its own URL. This makes it clear that this page is the main one.

- Apply Across All Similar Pages: Every duplicate or similar page should have the canonical tag pointing to the main version of the content.

- Use Full URLs: Always include the complete URL (e.g., “https://www.example.com”) in the canonical tag to avoid confusion.

Ways to Implement Canonical Tags:

- Edit HTML Directly: If you’re familiar with coding, you can add the canonical tag to the HTML of your pages.

- Use SEO Plugins: For WordPress, plugins like Yoast SEO can automatically handle canonical tags for you.

- Server-Side Scripting: If you’re using custom code, you can implement canonical tags through server-side scripts.

Monitor and Maintain:

It’s important to regularly check your canonical tags to make sure they’re working correctly. Tools like Google Search Console or SEO audit software can help you spot any problems.
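A basic version of that check can be scripted. The sketch below looks for three common problems in a page’s HTML: a missing canonical tag, more than one canonical tag, and a canonical URL that is not a full URL. The function name `check_canonical` and its messages are hypothetical. The check is also simplified, since it does not confirm that the tag sits inside the head section.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class CanonicalParser(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "link" and (attr.get("rel") or "").lower() == "canonical":
            self.canonicals.append(attr.get("href") or "")


def check_canonical(html):
    """Return "ok" or a short description of the first problem found."""
    parser = CanonicalParser()
    parser.feed(html)
    if not parser.canonicals:
        return "missing canonical tag"
    if len(parser.canonicals) > 1:
        return "multiple canonical tags"
    parts = urlparse(parser.canonicals[0])
    if not (parts.scheme and parts.netloc):
        return "canonical URL is not absolute"
    return "ok"


good = '<head><link rel="canonical" href="https://example.com/preferred-url-here/" /></head>'
bad = '<head><link rel="canonical" href="/preferred-url-here/"></head>'
print(check_canonical(good))
print(check_canonical(bad))
```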

By properly using canonical tags, you can avoid SEO issues caused by duplicate content, help search engines crawl your site more efficiently, and boost the rankings of your preferred pages.

Mobile Optimization

Mobile optimization is essential for making sure your website performs well in search rankings and offers a smooth experience for visitors using smartphones or tablets. With the majority of internet users browsing on mobile devices, it’s crucial for your site to be mobile-friendly.

Why Mobile Optimization is Important for SEO:

- Mobile Traffic Dominates: More than half of all web traffic now comes from mobile devices, so if your site isn’t optimized for mobile, you’re missing out on a huge audience.

- Google’s Mobile-First Indexing: Google now looks at the mobile version of your website first when deciding how to rank your site. If your mobile site isn’t up to par, it can hurt your rankings, even for desktop users.

- Better User Experience: Mobile-friendly websites load faster, are easier to navigate, and adjust to different screen sizes. This leads to lower bounce rates (fewer people leaving your site quickly) and a better experience for users.

- Local Search Performance: A large number of local searches on mobile result in people visiting a business within the same day. If your site isn’t optimized for mobile, you could lose out on valuable local customers.

- Higher Conversion Rates: Sites that are well-optimized for mobile lead to more successful transactions (like sales or sign-ups) because users have a smoother experience.

How to Test Your Website’s Mobile-Friendliness:

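- Google’s Mobile-Friendly Test: This official Google tool checked whether a page was mobile-friendly by Google’s standards. Google retired it in December 2023 and points to Lighthouse as an alternative.

- Google Search Console: The “Mobile Usability” report was retired in December 2023 as well, so use Lighthouse or your browser’s developer tools to find specific mobile issues on your pages.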

- Screaming Frog SEO Spider: This tool scans your site for mobile usability issues on a large scale, helping you identify any areas that need attention.

- Browser Developer Tools: Both Chrome and Firefox have mobile emulators that allow you to see how your site looks on different devices and screen sizes.

- Third-Party Tools:

- EXPERTE.com Bulk Mobile-Friendly Test: This tool checks up to 500 pages at once for mobile-friendliness.

- SE Ranking’s Mobile-Friendly Site Test: It tests both technical and usability aspects of your mobile site and offers suggestions for improvement.

- Lighthouse: This Google tool assesses how well your site performs on mobile in terms of speed, accessibility, and SEO.

- Manual Testing: Try using different mobile devices yourself to see how well your website performs. Testing with various screen sizes can give you insight into how users experience your site.

By regularly testing and optimizing your website for mobile, you can improve both your search engine rankings and user satisfaction, ensuring your site is ready for the majority of online traffic.